The rise in popularity of Kubernetes, an open-source cluster management platform, is closely linked to the spread of Cloud services. According to a 2018 tweet from Google, around 54% of Fortune 100 companies use Kubernetes. Kubernetes simplifies software development and IT infrastructure by managing the containers in which software applications run.

oneclick™’s tech stack runs on a scalable Kubernetes cluster. Read on for a closer look at what makes Kubernetes so remarkable and why it has revolutionised the software world.

What is Kubernetes or K8s?

Kubernetes is a term derived from ancient Greek meaning helmsman or pilot. It is often abbreviated to K8s, replacing the eight letters between the K and the final s with the numeral 8.
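
The abbreviation follows a common "numeronym" pattern, also seen in i18n for "internationalization": keep the first and last letters and replace everything in between with the number of letters removed. A minimal sketch of the rule (the function name `numeronym` is our own):

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as: first letter + count of middle letters + last letter."""
    # Words of three letters or fewer gain nothing from being abbreviated.
    if len(word) <= 3:
        return word
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("Kubernetes"))  # prints K8s
```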

Imagine a developer telling the helmsman: “I want a scalable app, like a digital workstation, but I don’t want to have to think about the infrastructure.” Handling that request is the role Kubernetes plays. Kubernetes is a portable, extensible, and scalable open-source container cluster management solution. K8s fully automates the provisioning, scaling, and management of containerised applications, and it coordinates compute, network, and storage infrastructure on behalf of user workloads.

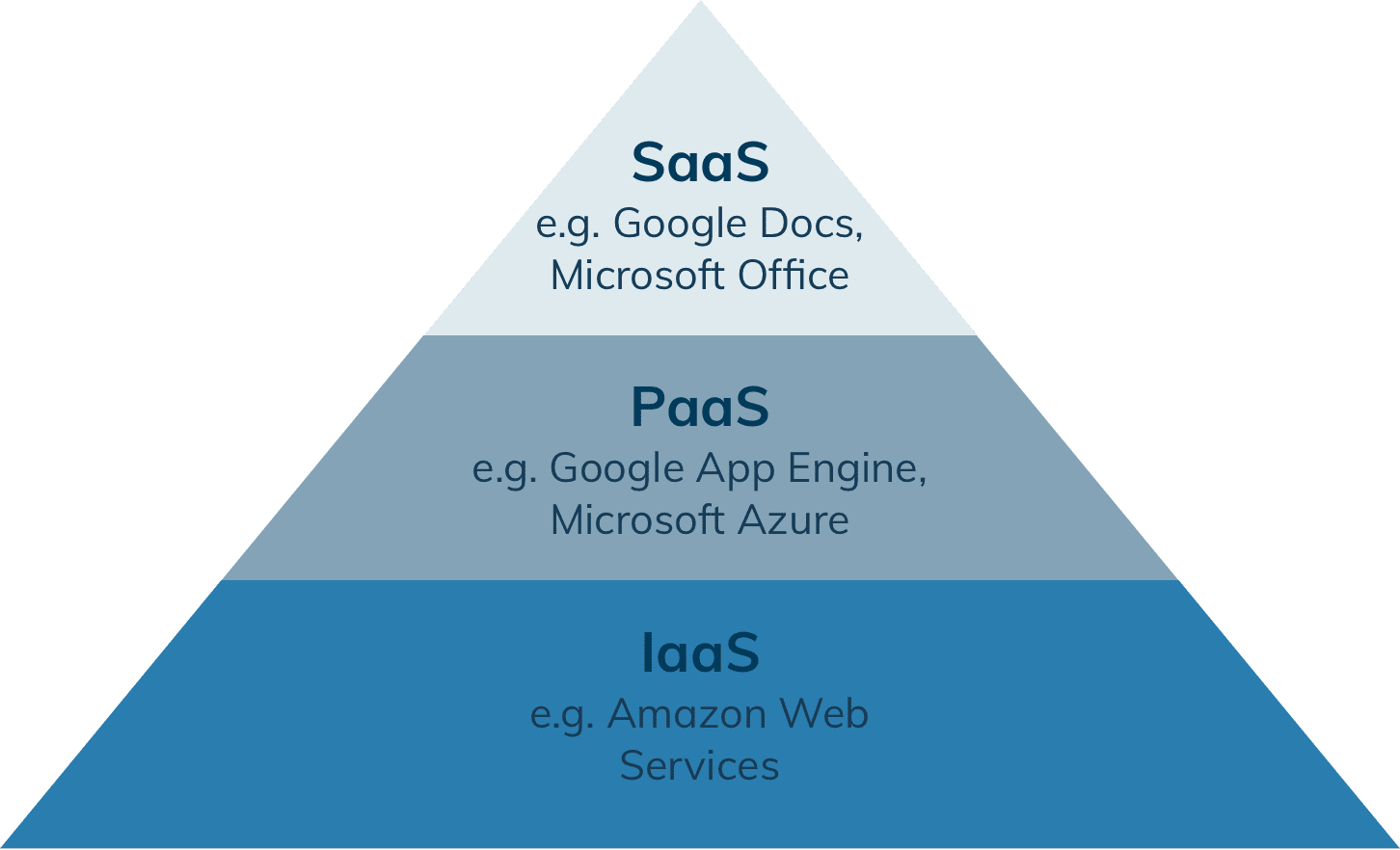

When used in the Cloud, Kubernetes offers much of the simplicity of Platform as a Service (PaaS) while retaining the flexibility of Infrastructure as a Service (IaaS). It enables portability and simplified scaling, which empowers infrastructure vendors to build robust Software as a Service (SaaS) business models.

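Kubernetes has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are well established. Kubernetes is not just an orchestration system; in fact, it eliminates the need for orchestration in the classic sense of executing a fixed workflow (first do A, then B, then C). Instead, a set of independent control processes continuously drives the current state of the cluster towards the desired state.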

Kubernetes manages many containers, each of which (like its image) is small and fast. This makes it practical to package each application or service in its own container image, and this one-to-one relationship lets the benefits of containers be fully exploited. Immutable container images can be created at build or release time rather than at deployment time, because each application does not need to be composed with the rest of the application stack or tied to the production infrastructure environment.

Creating container images at build or release time, in turn, carries a consistent environment from development through to production.

Each container holds the code and all dependencies of one service, so when demand for that service increases, more containers can be started. Consider video streaming: without containers, enough computing power for the predicted peak number of viewers would have to be kept permanently available. With containers, the available computing capacity can be used efficiently by starting or stopping additional containers as required. To do this, Kubernetes finds a server (a node) that still has spare capacity, starts another copy of the required service there, and releases that capacity again once demand falls. If a server fails, its containers are automatically restarted on other servers in the data center.
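
Deciding how many copies of a service to run is what Kubernetes' Horizontal Pod Autoscaler (HPA) automates. The Kubernetes documentation gives its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule with a tolerance band (0.1, the HPA's default) and minimum/maximum bounds; the function and parameter names are our own, and this is a simplified illustration rather than the controller's actual code.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style scaling rule (illustrative, not the real controller)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current count to avoid constant flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the configured lower and upper bounds.
    return max(min_replicas, min(max_replicas, desired))


# 3 replicas at 90% average CPU with a 50% target: scale out.
print(desired_replicas(3, 90, 50))  # prints 6
```

Scaling down works the same way: six replicas averaging 20% CPU against a 50% target shrink to three.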

Kubernetes has become the de facto international standard for container orchestration, and many container-based companies rely on it. The biggest challenge in adopting Kubernetes is the complexity of creating, operating, and updating a cluster: the many components that give Kubernetes its flexibility and ease of use must themselves be managed so that they remain highly available and scalable.

How did the project evolve?

Kubernetes is Google’s brainchild. It grew out of Borg, the internal cluster manager that Google developed in the early 2000s to run its large-scale production workloads and which, like Kubernetes, is based on Linux container technology. Kubernetes combines that experience with the best ideas, practices, and tools from the developer community. The name Borg comes from the science fiction series Star Trek, where it refers to a collective of beings linked into a single hive mind.

Borg gave Google a decisive competitive advantage, allowing it to operate massive infrastructure landscapes at significantly lower cost and improving the utilisation of its server hardware fivefold.

The lessons of the internal Borg project then flowed into the open-source Kubernetes. Google released Kubernetes as an open-source project in 2014, and it has since been developed under the umbrella of the Cloud Native Computing Foundation (CNCF). At the Open Source Summit in Vancouver in 2018, the CNCF also announced that Google would transfer the cloud resources used to build and test Kubernetes to the Foundation.

What makes Kubernetes so powerful?

Thanks to the flexibility that containers and their orchestration with Kubernetes provide, development teams can work more independently and with greater agility. New functions, services, and fixes can be rolled out faster and without downtime. If a cloud platform is the container ship that carries the containers, Kubernetes is the helmsman who makes sure each container is delivered to the right place at the right time.

Before containerisation, applications were deployed by installing them directly on a host. The disadvantage was that the applications’ executables, configurations, libraries, and lifecycles were entangled with each other and with the host operating system. Immutable virtual machine (VM) images made predictable rollouts and rollbacks possible, but a VM is an abstraction of an entire machine: each one carries its own full operating system (OS), which makes VMs heavyweight and not easily portable.

Containers instead provide virtualisation at the OS level, one level above hardware virtualisation. Each container is isolated from the others and from the host machine, although they all share the host’s kernel. Containers are easier to build than VMs, and because they are decoupled from the underlying infrastructure and the host file system, they are platform-independent and portable across clouds and operating system distributions.

Although Kubernetes already offers a lot of functionality out of the box, it can also be extended with application-specific workflows to speed up development. Kubernetes therefore also serves as a platform for building ecosystems and tools. At oneclick™, we chose Kubernetes as the foundation for our platform.

In addition to the advantages described above, Kubernetes also has other excellent design features:

- Health checks and self-healing: Failed containers are restarted automatically, and containers that do not respond to a health check are restarted as well. If an entire node fails, its containers are rescheduled onto other nodes. In addition, Kubernetes handles the automatic placement and replication of containers.

- Service discovery and load balancing: Kubernetes gives each group of containers (a Pod) its own IP address and a set of Pods a single DNS name, and it can load-balance traffic across that set under this shared identity.

- Pods: A Pod is a group of one or more containers that are always scheduled together on the same node. All containers in a Pod share its IP address, IPC namespace, hostname, and other resources such as shared storage. Each node, in turn, runs a kubelet and a container runtime that start and supervise its Pods.

- Kubelet: The kubelet is the master’s agent. It runs on every node, handles communication between the master and the node, and ensures that the containers described in the node’s Pods are running.
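
How health checks and load balancing fit together can be sketched in a few lines. In Kubernetes, a Pod that fails its readiness check is taken out of its Service's list of endpoints, while a failed liveness check makes the kubelet restart the container. The model below is hypothetical (the `Service` and `Endpoint` classes, the IP addresses, and the name `web.default.svc.cluster.local` are illustrative): one stable name sits in front of several Pod IPs, and requests rotate round-robin over the Pods that currently pass their checks. Round-robin is chosen for simplicity; how kube-proxy actually picks a backend depends on its mode.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Endpoint:
    ip: str
    ready: bool = True  # result of the Pod's most recent readiness check


@dataclass
class Service:
    """Hypothetical Service: one stable DNS name in front of several Pod IPs."""
    name: str
    endpoints: list[Endpoint] = field(default_factory=list)
    _counter: count = field(default_factory=count, repr=False)

    def pick(self) -> str:
        # Only Pods that pass their health checks receive traffic.
        ready = [e.ip for e in self.endpoints if e.ready]
        if not ready:
            raise RuntimeError(f"no ready endpoints for {self.name}")
        # Simple round-robin over the ready Pods.
        return ready[next(self._counter) % len(ready)]


svc = Service("web.default.svc.cluster.local", [
    Endpoint("10.0.0.1"), Endpoint("10.0.0.2"), Endpoint("10.0.0.3", ready=False),
])
print([svc.pick() for _ in range(4)])  # ['10.0.0.1', '10.0.0.2', '10.0.0.1', '10.0.0.2']
```

Clients only ever see the stable name, so Pods can come and go behind it without any change on the caller's side.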

How Kubernetes works

The Kubernetes system architecture is elegant in its design. Kubernetes orchestrates all aspects of container management and automates the necessary processes: setup, operation, and scaling up or down. Containers run on selected machines (physical or virtual), which Kubernetes constantly monitors to ensure that the workloads match the requirements their users have declared.
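
This constant monitoring is built on control loops: a controller compares the desired state (for example, "three copies of this Pod") with the observed state and acts only on the difference. Below is a minimal in-memory sketch under that assumption. `ReplicaController` and its methods are hypothetical, and a real controller watches the API server instead of a Python list.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ReplicaController:
    """Hypothetical controller that keeps `desired` copies of one Pod template running."""
    template: str
    desired: int
    running: list[str] = field(default_factory=list)
    _ids: count = field(default_factory=lambda: count(1), repr=False)

    def reconcile(self) -> list[str]:
        """Compare observed with desired state, act on the difference, return the actions."""
        actions = []
        # Too few Pods (e.g. one crashed): create replacements.
        while len(self.running) < self.desired:
            pod = f"{self.template}-{next(self._ids)}"
            self.running.append(pod)
            actions.append(f"create {pod}")
        # Too many Pods (e.g. after scaling down): delete the surplus.
        while len(self.running) > self.desired:
            actions.append(f"delete {self.running.pop()}")
        return actions


rc = ReplicaController("web", desired=3)
print(rc.reconcile())        # ['create web-1', 'create web-2', 'create web-3']
rc.running.remove("web-2")   # simulate a crashed Pod
print(rc.reconcile())        # ['create web-4']
```

Because `reconcile` only reacts to differences, running it again when nothing has changed does nothing, which is what makes it safe to repeat in a loop.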

Instances are provisioned according to load-balancing requirements, and if they fail or crash, Kubernetes restarts them. Even if an entire worker node fails or becomes unresponsive, Kubernetes reschedules its workloads onto a healthy node.
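
Both recovery paths can be illustrated with a toy "healing pass". Everything in it is a hypothetical simplification: `Pod`, `heal`, and the node names are our own, and `FAILURE_THRESHOLD = 3` mirrors the default `failureThreshold` of Kubernetes probes. A container that fails its health check several times in a row is restarted where it is, while a Pod on a node that is no longer ready is placed on the least-busy ready node. In real Kubernetes, Pods are not migrated: the owning controller creates replacements that the scheduler places elsewhere, and the sketch collapses that into a single step.

```python
from dataclasses import dataclass

FAILURE_THRESHOLD = 3  # consecutive failed health checks before a restart (assumed)


@dataclass
class Pod:
    name: str
    node: str
    failed_probes: int = 0
    restarts: int = 0


def heal(pods: list[Pod], ready_nodes: list[str]) -> list[str]:
    """Hypothetical self-healing pass over all Pods; returns a log of actions."""
    log = []
    for pod in pods:
        if pod.node not in ready_nodes:
            # The node is gone or unresponsive: run the workload on a healthy node.
            if not ready_nodes:
                log.append(f"{pod.name}: pending, no ready node")
                continue
            # Pick the ready node currently hosting the fewest Pods.
            target = min(ready_nodes, key=lambda n: sum(p.node == n for p in pods))
            log.append(f"{pod.name}: rescheduled {pod.node} -> {target}")
            pod.node, pod.failed_probes = target, 0
        elif pod.failed_probes >= FAILURE_THRESHOLD:
            # The container stopped answering its health check: restart it in place.
            pod.restarts += 1
            pod.failed_probes = 0
            log.append(f"{pod.name}: restarted on {pod.node}")
    return log


pods = [Pod("a", "node-1"), Pod("b", "node-2", failed_probes=3), Pod("c", "node-3")]
for line in heal(pods, ready_nodes=["node-1", "node-2"]):  # node-3 has failed
    print(line)
```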

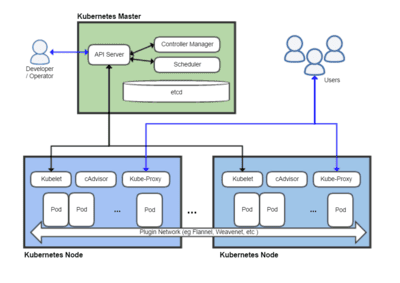

Kubernetes is built on a master/worker architecture in which the master component decides which nodes the containers run on. The Kubernetes architecture includes:

- The Kubernetes master – the central control element that distributes containers to the nodes and manages them. High availability can be achieved by running several replicated masters.

- Nodes – a VM or a physical server. The nodes run the Pods.

- Pods are the smallest deployable unit and contain one or more containers that share allocated resources.

- etcd – a key-value store that holds the configuration and state of the Kubernetes cluster. It is accessed through the API server.

- The API server – the front end of the master. It is the component that reads from and writes to etcd, and it exposes a REST API through which the other cluster components, tools, and users interact with the Kubernetes cluster.

- The kube-scheduler – an independent component that watches for newly created Pods that have no node assigned and, based on the nodes’ available resources and load, selects a node for each Pod to run on.

- The controller manager – runs the control loops (controllers) that monitor the cluster. Through the API server, each controller reads the current status and writes the changes needed to move the cluster towards its desired state.
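
The kube-scheduler's documented approach has two phases: filtering removes the nodes that cannot run a Pod, and scoring ranks the remaining ones. The sketch below mimics that with a simple CPU and memory check and a score that favours the node left with the most free capacity. `Node`, `schedule`, and the scoring formula are our own simplifications; the real scheduler evaluates many more criteria, such as affinity rules and taints.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_total: float          # CPU cores
    mem_total: float          # memory in GiB
    cpu_used: float = 0.0
    mem_used: float = 0.0
    ready: bool = True


def schedule(pod_cpu: float, pod_mem: float, nodes: List[Node]) -> Optional[str]:
    """Hypothetical filter-then-score placement of one Pod; returns the node name."""
    # Filter: keep only ready nodes with enough free CPU and memory.
    feasible = [n for n in nodes
                if n.ready
                and n.cpu_used + pod_cpu <= n.cpu_total
                and n.mem_used + pod_mem <= n.mem_total]
    if not feasible:
        return None  # the Pod stays pending until capacity frees up

    # Score: average fraction of CPU and memory left free after placement.
    def score(n: Node) -> float:
        cpu_left = 1 - (n.cpu_used + pod_cpu) / n.cpu_total
        mem_left = 1 - (n.mem_used + pod_mem) / n.mem_total
        return (cpu_left + mem_left) / 2

    best = max(feasible, key=score)
    # Bind: reserve the requested resources on the chosen node.
    best.cpu_used += pod_cpu
    best.mem_used += pod_mem
    return best.name


nodes = [Node("node-1", 4, 8, cpu_used=3.5),
         Node("node-2", 4, 8, cpu_used=1),
         Node("node-3", 8, 16, ready=False)]
print(schedule(1, 2, nodes))  # prints node-2 (node-1 is full, node-3 is not ready)
```

Spreading Pods towards the least-loaded node keeps headroom on every machine, which leaves room to absorb the restarts and rescheduling described above.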

Kubernetes and Cloud

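Thanks to Kubernetes, companies can run container-based applications on all major cloud platforms. The largest players in the global cloud market are Amazon Web Services (AWS), the Google Cloud Platform (GCP), and Microsoft Azure, and each offers a managed Kubernetes service: Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS).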

Are you interested in learning more?

If you are considering how containerisation can improve your service model, oneclick™ is here to help. Contact us to learn more about using containers in the Cloud to give your clients a truly global, low-latency experience of your services.

If you would like to learn more about the containers managed by Kubernetes, read on for an introduction to Docker.
